Overview

This SRM featured three pairs of problems with easy and hard versions: FoxHandle, CoinsGame, and SpellCards. The two CoinsGame problems could be solved with graph search, and the two SpellCards problems could be solved with dynamic programming. Notably, the harder version of SpellCards reduced to a trivial application of knapsack, but proving this reduction was tricky and most coders used a more obvious, but longer, dynamic programming solution to solve it. In fact, the author himself stated in this forum post that he did not know about the knapsack solution until after the contest.

FoxAndHandleEasy

This is a useful exercise in using the substr() function. Let \( n \) be the length of \( s \). Iterate over all length-\( n \) substrings of \( t \). Let this substring be called \( u \). If \( u \) is equal to \( s \) and if the string formed by removing \( u \) from \( t \) is also equal to \( s \), then return true.

CoinsGameEasy

There are several approaches which work. The simplest one is probably just to brute force all possible moves. The following is pseudocode for a recursive solution which implements that idea:

// xi, yi are the coordinates of coin i.

// steps is the number of steps the number of moves that we have made so far.

rec( x0, y0, x1, y1, steps )

{

if( ( x0', y0' is off the grid ) && ( x1', y1' is off the grid ) )

return INFINITY;

if( ( x0', y0' is off the grid ) || ( x1', y1' is off the grid ) )

return steps;

if( steps == 10 ) return INFINITY;

ans = INFINITY;

for each direction d

let x0', y0' = move in direction d from x0, y0;

let x1', y1' = move in direction d from x1, y1;

ans = min( ans, rec( x0', y0', x1', y1', steps + 1 ) );

return ans;

}

The recursion depth is at most 10, and each recursive call branches 4 times, so the function is called at most \( 4^{10} \approx 1 \text{ million } \) times. This is fast enough to pass on TopCoder.

Alternatively, you could use breadth-first search or some more fancy algorithms, but they aren't necessary in this case.

SpellCardsEasy

Let's start with a simple solution, figure out why it doesn't work, and then improve on it. Suppose we have the following recurrence:

f( i )

{

if( i == n ) return 0;

// use card

use = 0;

if( i + lvl[i] <= n ) ans = dmg[i] + f( i + lvl[i] );

// don't use card

no_use = f( i + 1 );

return max( use, no_use );

}

This seems simple enough: We look at each card \( i \) and decide whether to use it or not. If we use it, then we remove cards \( i, i + 1, \ldots, i + lvl_i - 1 \), and then recursively proceed from there. The problem, unfortunately, is that the cards we decide to remove may not necessarily be contiguous. Consider the following example:

\[

lvl = [ 2, 1, 1 ] \\

dmg = [ 100, 1, 0 ]

\]

Here it's optimal to first use middle card, then use the first card, dealing a total of 101 damage. Our algorithm, on the other hand, would return 100 because it uses the first card, and then discards the first two cards.

Suppose we keep a count of cards that need to be removed, but we don't remove them right away*. Call this value \( need \). Now, examining each card from left to right, we can do the following:

- Don't use the card. This decreases our current \( need \) value by 1.

- Use the card. This increases the damage we do by \( dmg_i \) and increases \( need \) by \( lvl_i \).

Claim: If, after examining all the cards, we have \( need = 0 \), then the cards we chose to use are a valid combination.

Proof: Suppose the rightmost card that we decided to use was card \( i \). When we processed card \( i \), \( need = k \) for some \( k \), and afterwards, we had \( need = k + lvl_i \). Since we managed to decrement \( need \) to 0 by the time we processed all the cards, then there must be at least \( k + lvl_i - 1 \) cards to the right of \( i \). Now let's use card \( i \) and then remove cards \( i, i + 1, \ldots, i + lvl_i - 1 \) from the deck. This new deck also satisfies the \( need = 0 \) property. That is, if you look at all the cards we decided to use and increment or decrement \( need \), then you still get to \( need = 0 \) after examining all the cards. We can then inductively apply the above process. Use the rightmost card, and then remove it, along with some cards to the right of it. Eventually we will have used all the cards we chose originally without breaking any rules of the game.

Based on our above observation, we can write a new recurrence relation:

f( i, need )

{

if( i == n ) return 0;

// use card i

use = 0;

if( need + lvl[i] <= n ) use = dmg[i] + f( i + 1, need + lvl[i] - 1 );

// don't use card i

no_use = f( i + 1, max( need - 1, 0 ) )

return max( use, no_use )

}

Now we just need to use dynamic programming or memoisation to make sure that each recursive call gets evaluated at most once. The complexity of this algorithm is \( O( N^2 ) \) for \( N \) cards, which is more than fast enough for the time limit.

FoxAndHandle

There are several approaches to solve this problem. In this tutorial, I'll go over a simple semi-greedy approach. In general, problems that require a 'lexicographically least' solution suggest some sort of smart greedy solution, but this is not always the case.

We begin with a simple algorithm and fill in the blanks. The algorithm below keeps track of the position of the last character we used, and at each step, searches all subsequent characters and picks the 'best' one:

f( s )

{

ans = "";

last = 0;

for t from 0 to length(s) / 2

{

best = last;

for i from last to length(s)

if (we can append s[i] to ans) && (s[i] < s[best])

best = i;

ans += s[best];

last = best;

}

}

The only missing piece is determining whether, at each step, we can append some \( s_i \) to \( ans \). As it turns out, the following condition is sufficient:

Let \( cnt( c, str ) \) denote the number of occurrences of \( c \) in some string \( str \). Then we can append \( s_i \) to \( ans \) if and only if for all \( c \), \[ cnt( c, ans ) + cnt( c, s_{ i, i + 1, \ldots, n - 1 } ) \geq cnt( c, s ) / 2 \]The rationale for the above condition is the following. Let's examine each character that we have already added to \( ans \) (i.e. \( cnt( c, ans ) \)), as well as each character which we could potentially add to \( ans \) in the future (i.e. \( cnt( c, s_{ i, i + 1, \ldots, n - 1 } ) \) ). In order for us to produce a valid string in the end, these two numbers together must be at least equal to the number of characters we need in the final string, which is \( cnt( c, s ) / 2 \). This is both necessary and sufficient for \( s_i \) to be a valid addition to \( ans \).

Once we have the above condition, the pseudocode now looks like this:

f( s )

{

ans = "";

last = 0;

for t from 0 to length(s) / 2

{

best = last;

for i from last to length(s)

{

ok = true;

for c from 'a' to 'z'

if cnt( c, ans ) + cnt( c, s.substr( i ) ) < cnt( c, s ) / 2

ok = false;

if ok && s[i] < s[best]

best = i;

}

ans += s[best];

last = best;

}

}

SpellCards

We can solve this problem in a similar manner as we solved its division 2 version. All we have to do is to rotate the string, and compute the same recurrence relation as above. However, there is a more nifty solution that's trickier to see, but much, much easier to code. It turns out that SpellCards is equivalent to the knapsack problem, where each object \( i \) has weight \( lvl_i \) and value \( dmg_i \).

Proof: It suffices to show that a set of cards \( S = \{ x_1, x_2, \ldots, x_m \} \) can be played if and only if \[ \sum_{ x \in S } lvl_x \leq n \] One direction is clear -- all solutions to SpellCards must also be solutions to knapsack. For the converse, assume the above inequality holds for some set of cards \( S = \{ x_1, x_2, \ldots, x_m \} \).

Claim: There must be at least one card \( x_i \) that we can use without removing any other cards in \( S \) in the process.

Proof of claim: Assume the contrary -- that for each \( x_i \), there is some other \( x_j \) within the range \( [ x_i + 1, \ldots, x_i + lvl_{x_i} - 1 ] \) modulo \( n \). Then we have a collection of intervals \( [ x_i, x_i + 1, \ldots, x_i + lvl_{x_i} - 1 ] \) which not only cover all the cards, but also overlap. This implies that \[ \sum_{ x \in S } lvl_x > n \] which contradicts the assumption. Thus, there must be at least one card which we can use.

Given the claim, for every knapsack-satisfying set \( S = \{ x_1, \ldots, x_m \} \), we can use some \( x_i \in S \) without removing any of the other \( x_j \). This leaves us with \( n - lvl_{x_i} \) cards total. Furthermore, \[ \sum_{ x \in S \setminus \{ x_i \} } lvl_x = \left[ \sum_{ x \in S } lvl_x \right] - lvl_{x_i} \leq n - lvl_{x_i} \] I.e., after using \( x_i \), the set \( S \setminus \{ x_i \} \) still satisfies the knapsack inequality with the new set of cards. By induction, we can use all the cards if the initial set \( S \) satisfies the inequality. This completes the proof: we can solve SpellCards by simply applying a knapsack dynamic program with weights \( lvl_i \) and value \( dmg_i \).

CoinsGame

The notion of an equivalence relation is a very useful tool from basic mathematics which can help us solve this problem. Equivalence relations allow us to define relationships between arbitrary subsets of a particular set \( S \) in terms of binary relations between individual elements, in effect turning \( O( 2^{|S|} ) \) amount of data into \( O( |S|^2 ) \).

In this problem, we would like to consider the following relation: for two given non-obstacle squares in the grid \( a \) and \( b \), place coins on both \( a \) and \( b \). We say \( a \sim b \) (or \( a \) is similar to \( b \)) if every sequence of moves that causes \( a \)'s coin to fall off also causes \( b \)'s coin to fall off, and vice versa. I.e., the starting configuration consisting of coins on \( a \) and \( b \) is not a good configuration. (The proof that this is an equivalence relation is left to the reader.)

Now suppose that from the above relation, we have equivalence classes \( C_1, C_2, \ldots, C_m \) that partition \( S \), the set of non-obstacle squares. By the way we defined the relation, an initial configuration is good if and only if it contains some squares in at least two classes. Then the total number of good configurations is the number of configurations containing only squares in one class, namely \[ 2^{|S|} - 1 - \sum_{i} \left( 2^{|C_i|} - 1 \right) \]

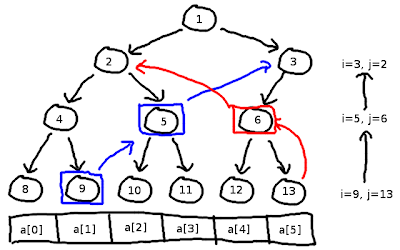

It remains to determine how we actually compute the equivalence classes. We do this by computing which pairs \( a, b \) are not similar, i.e. there exists some sequence of moves which causes \( a \)'s coin to fall off, but not \( b \)'s, or vice versa. It turns out that we can do this with a simple breath-first search (DFS will overflow the stack). Let \( prev( s, d ) \) denote the set of all squares \( t \) such that moving in direction \( d \) from \( t \) takes you to \( s \). Then the following algorithm computes whether or not pairs of squares are distinct:

boolean distinct[N][N]; listThis algorithm runs in \( O( |S|^2 ) \); since \( S \leq 1600 \), this will complete in under the time limit.q; for( square s0 ) for( square s1 ) { distinct[s0][s1] = false; for( each direction d ) { let s0' = move in direction d from s0; let s1' = move in direction d from s1; if( ( s0' is off the board ) ^ ( s1' is off the board ) ) distinct[s0][s1] = true; } if( distinct[s0][s1] ) q.add( s0, s1 ) } while( q is not empty ) { s0, s1 = q.pop(); for( each direction d ) for( t0 in prev( s0, d ) ) for( t1 in prev( s1, d ) ) { if( !distinct[t0][t1] ) { distinct[t0][t1] = true; q.add( t0, t1 ); } } }

Once we have computed which pairs are distinct, it is quite simple to figure out the equivalence classes and then compute the final answer.

Footnotes

- This is a very standard technique in designing amortised algorithms: you keep track of the 'costs' you incur, and then you figure out how to 'distribute' them optimally later. Resizing ArrayLists or vectors is a classic example of a commonly used amortised algorithm.